How to Save Scraped Data in to Googlesheet Using Python

How to Save Scraped Data in to Googlesheet Using Python

Send download link to:

Google spreadsheet is a very popular tool to save data in a tabular form similar to Excel. The ability to store it on google drive and share across multiple people makes it the most popular choice. In our previous tutorial we have seen how to save data to Excel and MySQL Database. In this tutorial we will see how to Save Scraped Data in to Googlesheet directly.

To access our spreadsheet using python we need to use Google Drive API provided by Google. To enable Drive API follow below steps:

- Go to the Google APIs Console.

- Create a new project.

- Click Enable API. Search for and enable the Google Drive API.

- Create credentials for a Web Server to access Application Data.

- Name the service account and grant it a Project Role of Editor.

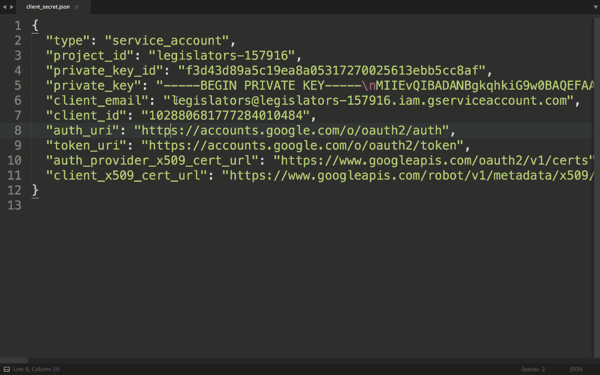

- Download the JSON file.

- Copy the JSON file to your code directory.

There is one last required step to authorize your app, and it’s easy to miss!

Find the client_email inside client_secret.json. Back in your spreadsheet, click the Share button in the top right, and paste the client email into the People field to give it edit rights. Hit Send.

If you skip this step, you’ll get a gspread.exceptions.SpreadsheetNotFound error when you try to access the spreadsheet from Python.

Now we are all set to use Google Drive API. We will use 3 libraries gspread (https://gspread.readthedocs.io/en/latest/), oauth2client (https://pypi.org/project/oauth2client/) and df2gspread (https://pypi.org/project/df2gspread/).

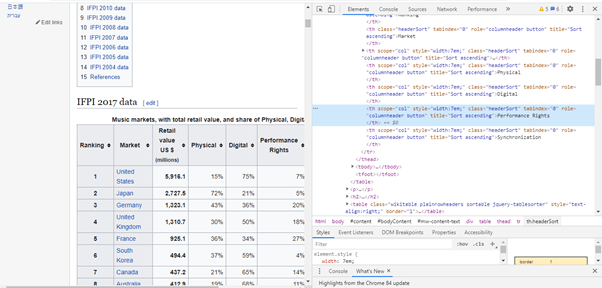

Let’s go to this Wikipedia page https://en.wikipedia.org/wiki/List_of_largest_recorded_music_markets and scrape data in year 2017 table:

See the complete code below or watch the video for detailed explanation:

#To read downloaded json file from google api

import json

with open('mdrive.json') as f:

data = json.load(f)

#Install libraries

pip install gspread oauth2client df2gspread

import gspread

from oauth2client.service_account import ServiceAccountCredentials

# use creds to create a client to interact with the Google Drive API

scope = ['https://spreadsheets.google.com/feeds','https://www.googleapis.com/auth/drive','https://www.googleapis.com/auth/drive.file','https://www.googleapis.com/auth/spreadsheets']

creds = ServiceAccountCredentials.from_json_keyfile_name('mdrive.json', scope)

client = gspread.authorize(creds)

# Find a workbook by name and open the first sheet

# Make sure you use the right name here.

sheet = client.open("webscrap").sheet1

spreadsheet_key = 'yourkey’

#Start Scraping

import bs4

import urllib.request

from urllib.request import urlopen

from bs4 import BeautifulSoup as soup

html = urlopen('https://en.wikipedia.org/wiki/List_of_largest_recorded_music_markets')

bsobj = soup(html.read())

tbody = bsobj('table',{'class':'wikitable plainrowheaders sortable'})[0].findAll('tr')

xl = []

for row in tbody:

cols = row.findChildren(recursive = False)

cols = [element.text.strip() for element in cols]

xl.append(cols)

#Save to pandas dataframe

import pandas as pd

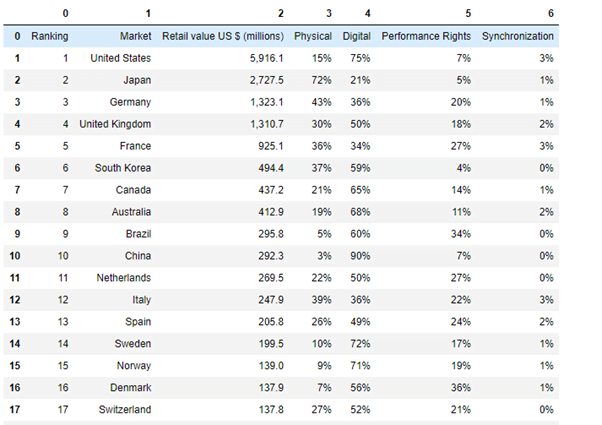

df = pd.DataFrame(data = xl)

Output:

#Write from pandas data frame to google spreadsheet using df2gspread

from df2gspread import df2gspread as d2g

wks_name = 'Sheet1'

cell_of_start_df = 'A1'

d2g.upload(df,

spreadsheet_key,

wks_name,

credentials=creds,

col_names=False,

row_names=False,

start_cell = cell_of_start_df,

clean=False)Hope this tutorial is helpful for you, if any query then contact us. Our data scraping services can provide data in to all type of format as per need like CSV, XLS, JSON or Save Scraped Data in to Googlesheet or upload on your server.